I know why you’re here. You want to pass the Google Ads Measurement Certification, right? And you’re stuck on one of those tricky questions about experiments and measurement strategies. Don’t worry, you’re exactly where you need to be.

In this article on Miteart, I’ll break down a real exam question that often trips people up. Not only will I give you the correct answer, but I’ll also explain why it’s right, why the other options don’t work, and share a real-life example to help you remember it for the long haul.

If you’re managing campaigns with Google Ads and want to understand how incrementality testing adds unique value beyond simple A/B comparisons, then this lesson is for you. These concepts are key not just for the exam, but for running smarter, more effective campaigns in the real world.

So, without further delay, let’s go through the question in full detail.

Question

How are incrementality experiments different from A/B experiments?

A) They measure the relative effectiveness of different versions of a marketing campaign.

B) They determine the impact of ads on a consumer’s decision to convert or not.

C) They typically require a smaller sample size and less sophisticated statistical analysis.

D) They both require a holdback group to determine which version of an ad performs better.

The correct answer

✅ B) They determine the impact of ads on a consumer’s decision to convert or not.

Why the correct answer is right

Incrementality experiments are designed to assess the true effect of advertising. Instead of just comparing two variations like an A/B test, incrementality studies ask a bigger question: Would a conversion have happened even without the ad?

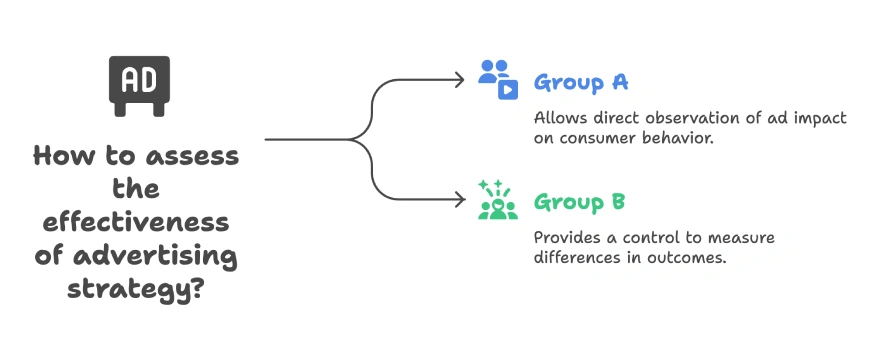

These experiments isolate the effect of an ad campaign by creating two groups:

- A test group that sees the ad.

- A holdback/control group that doesn’t see the ad.

By comparing the conversion behavior of these groups, advertisers can determine how many conversions were directly influenced by the ad (i.e., the ad’s incrementality).

This provides a clearer picture of actual ad impact, especially in cross-channel and multi-touchpoint environments where attributing conversions is complex.
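To make the comparison concrete, here is a minimal Python sketch of how lift could be worked out from the two groups. The function name `incremental_lift` and the sample numbers are hypothetical, chosen only for illustration; this is the basic arithmetic behind the idea, not the calculation Google's tools perform.

```python
def incremental_lift(test_conversions, test_size, control_conversions, control_size):
    """Estimate incremental conversions and relative lift from two groups.

    Illustrative helper (not part of any Google Ads API).
    """
    if test_size <= 0 or control_size <= 0:
        raise ValueError("group sizes must be positive")

    test_rate = test_conversions / test_size
    control_rate = control_conversions / control_size

    # Conversions the test group would be expected to produce with no ads,
    # based on how the unexposed control group behaved.
    expected_baseline = control_rate * test_size
    incremental = test_conversions - expected_baseline

    # Relative lift: how much higher the exposed rate is than the baseline rate.
    relative_lift = (test_rate - control_rate) / control_rate if control_rate else None
    return incremental, relative_lift


# Hypothetical numbers: 4,000 exposed users, 2,000 held back.
incremental, lift = incremental_lift(120, 4000, 45, 2000)
print(f"Incremental conversions: {incremental:.0f}, relative lift: {lift:.0%}")
```

With these made-up inputs, the control group converts at 2.25%, so about 90 of the test group's 120 conversions would likely have happened anyway. That leaves roughly 30 incremental conversions, a relative lift of about 33%.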

Why the other options are wrong

I know you already found the right answer, and you might feel there’s no need to look further. But trust me, knowing why the other choices are wrong will help you understand better and avoid mistakes in the exam. So, let’s quickly go through them.

A) They measure the relative effectiveness of different versions of a marketing campaign.

Why it’s wrong:

This statement describes A/B experiments, not incrementality tests. A/B testing is designed to compare two or more versions of a campaign (e.g., different ad creatives, headlines, or landing pages) to see which one performs better.

Incrementality testing, on the other hand, focuses on whether the marketing effort itself is driving conversions at all, by comparing users who saw ads versus those who didn’t. It answers a deeper question: Would these conversions still happen without ads?

So, while A/B tests focus on variations, incrementality experiments focus on impact.

C) They typically require a smaller sample size and less sophisticated statistical analysis.

Why it’s wrong:

In reality, incrementality experiments often require larger sample sizes and more advanced statistical models compared to traditional A/B tests.

Why? Because measuring incremental lift involves isolating the true impact of ads from natural user behavior — something that’s not easily observable. This process requires:

- Control groups (holdouts),

- Randomized audience splitting,

- And often, modeling techniques like causal inference.

Meanwhile, A/B testing can often work with smaller groups and simpler metrics like click-through rate or conversion rate. So, this option incorrectly simplifies incrementality testing.
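To give a feel for why sample size matters, here is a rough sketch of one of the simplest statistical checks, a two-proportion z-test, written with only the Python standard library. It is a textbook test picked for illustration, not necessarily the method Google uses, and all numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_test, n_test, conv_ctrl, n_ctrl):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_test = conv_test / n_test
    p_ctrl = conv_ctrl / n_ctrl
    # Pooled rate under the assumption that ads make no difference.
    pooled = (conv_test + conv_ctrl) / (n_test + n_ctrl)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_test + 1 / n_ctrl))
    z = (p_test - p_ctrl) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same conversion rates (5% exposed vs. 3% held back), different group sizes.
z_large, p_large = two_proportion_z_test(500, 10_000, 300, 10_000)
z_small, p_small = two_proportion_z_test(25, 500, 15, 500)

print(f"large groups: z={z_large:.2f}, p={p_large:.4f}")
print(f"small groups: z={z_small:.2f}, p={p_small:.4f}")
```

With 10,000 users per group the difference is clearly significant, while the same rates with only 500 users per group do not clear the usual 5% threshold. That is the practical reason incrementality tests tend to need bigger audiences.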

D) They both require a holdback group to determine which version of an ad performs better.

Why it’s wrong:

While both experiment types can include a holdback group (or control group), their purpose is different.

In A/B testing, the holdback is often just another version of the ad or experience — it’s still an ad. For example, Group A sees version 1 of a landing page; Group B sees version 2.

But in incrementality experiments, the holdback group is intentionally not exposed to any ads at all. This is critical. The purpose is to compare conversions between exposed vs. unexposed groups to determine how much lift (incrementality) the advertising is actually generating.

So, while both use holdbacks, incrementality is about ad exposure vs. no exposure, not just comparing creatives.

✅ Recap:

| Option | Why It’s Wrong |
|---|---|
| A | Describes A/B testing, not incrementality |
| C | Underestimates the complexity of incrementality testing |
| D | Confuses the type and purpose of holdback groups |

Only the correct answer reflects the true purpose of incrementality testing:

👉 To determine the actual impact of ads on conversion behavior.

Real-life example

Let’s say Sarah runs a digital ad campaign for her online pet supply store.

She wants to know whether her Facebook ads are actually driving sales, or if customers would buy anyway.

To find out, she sets up an incrementality experiment:

- Group A (test) sees her ads.

- Group B (holdback) is randomly selected to not see any ads.
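In practice the ad platform handles the random split, but the idea can be sketched in a few lines of Python. The function name `assign_group`, the salt, and the user IDs below are all hypothetical; this is just one common way to get a stable, effectively random assignment, not how any particular platform does it.

```python
import hashlib


def assign_group(user_id: str, holdback_share: float = 0.5,
                 salt: str = "pet-store-test") -> str:
    """Deterministically place a user in 'test' or 'holdback'.

    Hashing the salted ID gives a stable, pseudo-random bucket in [0, 1),
    so the same user always lands in the same group.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000
    return "holdback" if bucket < holdback_share else "test"


# Hypothetical audience of 10,000 user IDs.
groups = [assign_group(f"user-{i}") for i in range(10_000)]
print("holdback:", groups.count("holdback"), "test:", groups.count("test"))
```

The key property is that assignment does not depend on anything about the user's behavior, so the two groups should differ only in whether they were shown ads.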

After two weeks, she sees that Group A had 500 conversions, while Group B had only 300. Assuming the two groups are the same size, this indicates her ads led to roughly 200 incremental conversions she wouldn’t have had otherwise.
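One caveat worth spelling out: subtracting raw counts like this only works when both groups are the same size. The short sketch below scales the holdback count to the test group's size before subtracting. The group sizes are my own assumptions (the example doesn't state them), and the function name is hypothetical.

```python
def incremental_conversions(test_conv, test_size, holdback_conv, holdback_size):
    """Scale the holdback's conversions to the test group's size, then subtract."""
    scaled_baseline = holdback_conv * test_size / holdback_size
    return test_conv - scaled_baseline


# Assumed equal split: 10,000 users in each group.
equal_split = incremental_conversions(500, 10_000, 300, 10_000)

# Assumed 80/20 split with the same raw counts: the picture changes completely.
uneven_split = incremental_conversions(500, 16_000, 300, 4_000)

print(equal_split)   # 200.0
print(uneven_split)  # -700.0
```

With equal groups, Sarah's 200 figure holds. With an 80/20 split, the same raw counts would mean the holdback group actually converted at a higher rate, so always compare rates or scaled counts rather than raw totals.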

If she had just run an A/B test with different ad creatives, she wouldn’t know whether either creative was really causing purchases or was simply being seen by people who already intended to buy.

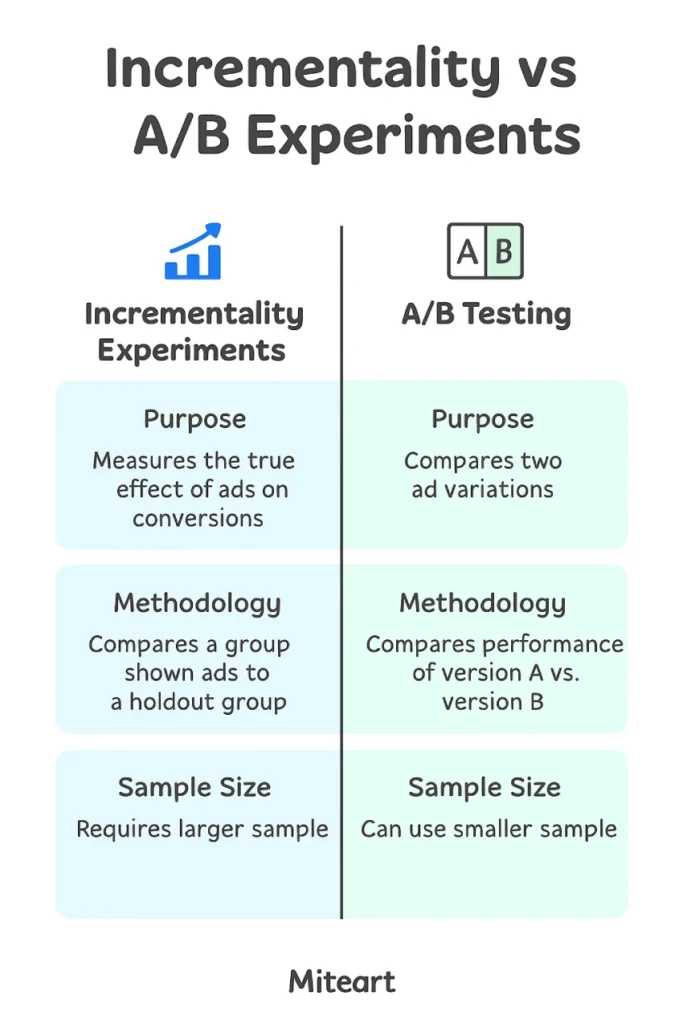

Incrementality Experiments vs. A/B Tests

| Feature | Incrementality Experiment | A/B Test |
|---|---|---|
| Goal | Measure true lift from ads | Compare two ad versions |
| Groups | Exposed group vs. control (no exposure) | Variation A vs. Variation B |
| Focus | Causality (Would they convert without ad?) | Performance difference between versions |
| Sample Size | Larger, needs statistical depth | Often smaller |
| Complexity | High (advanced modeling, holdout logic) | Lower (simpler comparisons) |

Conclusion

Incrementality experiments go deeper than traditional A/B tests by helping advertisers understand true ad impact. Rather than just testing which version of an ad works better, incrementality asks, “Would this customer have converted without the ad?”

If you encounter this question on your certification exam, remember:

✅ Incrementality = measuring causal impact of ads.

Knowing this difference can help you manage your media budget better and confidently pass your certification.

I hope you now understand the question and how to choose the right option. When you are ready, you can take the Google Ads Measurement Certification exam on Skillshop. If you want more real exam questions and answers like this one, follow along. I’ll be breaking down more Google Ads Measurement Certification exam questions with full solutions in upcoming posts!

FAQs

Are incrementality experiments harder to run than A/B tests?

Incrementality experiments need more planning, complex setup, and advanced stats skills than regular A/B tests because they focus on measuring the real impact by carefully isolating cause and effect.

Can I use Google Ads to run incrementality tests?

Yes. Google offers tools for running incrementality tests, like lift measurement and holdout experiments. These help advertisers measure the true effect of their ads by comparing groups that saw the ads with those that didn’t.

What kind of business should use incrementality tests?

Advertisers who want to know the true impact of their ads should use incrementality tests, especially if customers interact with many touchpoints. These tests are most helpful for businesses with large budgets or complex, multi-channel setups.

Is it necessary to always run incrementality tests?

Not always. Use incrementality experiments mainly to check costly campaigns or when current attribution methods don’t clearly show the real impact of ads. Save these tests for decisions where certainty really matters.